import numpy as np
import pandas as pd
import requests
from datetime import datetime

import matplotlib.pyplot as plt
import seaborn as sns

from sklearn import svm
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import (LabelBinarizer, MinMaxScaler, OneHotEncoder,
                                   RobustScaler, StandardScaler)
from xgboost import XGBRegressor

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, regularizers
from tensorflow.keras.metrics import MeanSquaredError

Preprocess Data

DATA_DIR = '/content/drive/MyDrive/courses/HKUST/MSBD5001/project/data/group project'

train = pd.read_csv(f'{DATA_DIR}/disney_shanghai.csv', parse_dates=['Time'])

# The test CSV has no header row, so the column names are supplied explicitly.
test = pd.read_csv(f'{DATA_DIR}/disney_shanghai_test.csv',
                   names=['Time', 'Facility ID', 'Name', 'Wait time', 'Ride type',
                          'Temperature', 'Max temperature', 'Min temperature', 'Humidity',
                          'Pressure', 'Wind degree', 'Wind speed', 'Cloud', 'Weather',
                          'Weather description'],
                   parse_dates=['Time'])

print(train.info())

print(test.info())

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 32024 entries, 0 to 32023

Data columns (total 17 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Name 32024 non-null object

1 Ride type 32024 non-null object

2 Time 32024 non-null datetime64[ns, pytz.FixedOffset(480)]

3 Fastpass-avaliable 32024 non-null bool

4 Status 32024 non-null object

5 Wait time 14621 non-null float64

6 Weather 32024 non-null object

7 Weather description 32024 non-null object

8 Temperature 32024 non-null float64

9 Max temperature 32024 non-null float64

10 Min temperature 32024 non-null float64

11 Pressure 32024 non-null int64

12 Humidity 32024 non-null int64

13 Wind degree 32024 non-null float64

14 Wind speed 32024 non-null float64

15 Cloud 32024 non-null int64

16 Visibility 32024 non-null int64

dtypes: bool(1), datetime64[ns, pytz.FixedOffset(480)](1), float64(6), int64(4), object(5)

memory usage: 3.9+ MB

None

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10449 entries, 0 to 10448

Data columns (total 15 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Time 10449 non-null datetime64[ns, UTC]

1 Facility ID 10449 non-null object

2 Name 10449 non-null object

3 Wait time 4016 non-null float64

4 Ride type 10449 non-null object

5 Temperature 10449 non-null int64

6 Max temperature 10449 non-null float64

7 Min temperature 10449 non-null float64

8 Humidity 10449 non-null int64

9 Pressure 10449 non-null int64

10 Wind degree 10449 non-null int64

11 Wind speed 10449 non-null float64

12 Cloud 10449 non-null int64

13 Weather 10449 non-null object

14 Weather description 10449 non-null object

dtypes: datetime64[ns, UTC](1), float64(4), int64(5), object(5)

memory usage: 1.2+ MB

None

Show training data

train = train[['Name', 'Time', 'Wait time', 'Weather', 'Temperature', 'Max temperature', 'Min temperature', 'Pressure', 'Humidity', 'Wind degree', 'Wind speed', 'Cloud']]

train.head(2)

| | Name | Time | Wait time | Weather | Temperature | Max temperature | Min temperature | Pressure | Humidity | Wind degree | Wind speed | Cloud |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Camp Discovery | 2020-08-31 13:38:47.638019+08:00 | 0.0 | Clouds | 33.28 | 34.0 | 34.0 | 1009 | 66 | 110.0 | 5.0 | 75 |
| 1 | Challenge Trails at Camp Discovery | 2020-08-31 13:38:47.638035+08:00 | 10.0 | Clouds | 33.28 | 34.0 | 34.0 | 1009 | 66 | 110.0 | 5.0 | 75 |

Show test data

test = test[['Name', 'Time', 'Wait time', 'Weather', 'Temperature', 'Max temperature', 'Min temperature', 'Pressure', 'Humidity', 'Wind degree', 'Wind speed', 'Cloud']]

test.head(2)

| | Name | Time | Wait time | Weather | Temperature | Max temperature | Min temperature | Pressure | Humidity | Wind degree | Wind speed | Cloud |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Challenge Trails at Camp Discovery | 2020-11-14 10:07:34+00:00 | 30.0 | Clouds | 18 | 18.0 | 18.0 | 1026 | 68 | 70 | 3.0 | 20 |
| 1 | Vista Trail at Camp Discovery | 2020-11-14 10:07:34+00:00 | 0.0 | Clouds | 18 | 18.0 | 18.0 | 1026 | 68 | 70 | 3.0 | 20 |

Convert ride names into integer IDs

unique_names = train[['Name']].drop_duplicates()

unique_names['id'] = range(len(unique_names))

train = train.merge(unique_names, on='Name')

unique_names

| | Name | id |
|---|---|---|
| 0 | Camp Discovery | 0 |
| 1 | Challenge Trails at Camp Discovery | 1 |
| 2 | Vista Trail at Camp Discovery | 2 |
| 3 | Soaring Over the Horizon | 3 |
| 4 | “Once Upon a Time” Adventure | 4 |
| 5 | Alice in Wonderland Maze | 5 |
| 6 | Frozen: A Sing-Along Celebration | 6 |
| 7 | Hunny Pot Spin | 7 |
| 8 | Peter Pan’s Flight | 8 |
| 9 | Seven Dwarfs Mine Train | 9 |
| 10 | The Many Adventures of Winnie the Pooh | 10 |
| 11 | Voyage to the Crystal Grotto | 11 |
| 12 | Dumbo the Flying Elephant | 12 |
| 13 | Fantasia Carousel | 13 |
| 14 | TRON Lightcycle Power Run – Presented by Chevr... | 14 |
| 15 | Stitch Encounter | 15 |
| 16 | Jet Packs | 16 |
| 17 | Buzz Lightyear Planet Rescue | 17 |
| 18 | Siren's Revenge | 18 |
| 19 | Shipwreck Shore | 19 |
| 20 | Pirates of the Caribbean Battle for the Sunken... | 20 |
| 21 | Explorer Canoes | 21 |
| 22 | Eye of the Storm: Captain Jack’s Stunt Spectac... | 22 |
| 23 | Roaring Rapids | 23 |
| 24 | Ignite the Dream - A Nighttime Spectacular of ... | 24 |
| 25 | Mickey’s Storybook Express | 25 |
| 26 | Golden Fairytale Fanfare | 26 |
| 27 | TRON Realm, Chevrolet Digital Challenge | 27 |
| 28 | Rex’s Racer | 28 |
| 29 | Slinky Dog Spin | 29 |
| 30 | Woody’s Roundup | 30 |
| 31 | Marvel Universe | 31 |
| 32 | Club Destin-E | 32 |
| 33 | Color Wall | 33 |
| 34 | Avengers Training Initiative | 34 |
| 35 | Wave Hello to Your Favorite Mickey Avenue Char... | 35 |
| 36 | Mickey Avenue Kiss Goodnight | 36 |
| 37 | Adventurous Friends Exploration | 37 |
| 38 | Catch a Glimpse of Jack Sparrow | 38 |
| 39 | Hundred Acre Wood Character Sighting | 39 |
| 40 | Princess Balcony Greetings | 40 |
| 41 | Avengers Assemble at the E-Stage | 41 |
| 42 | Woody’s Rescue Patrol | 42 |
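The drop_duplicates/range/merge steps above can also be done with pd.factorize, which numbers values in order of first appearance and so yields the same IDs. A minimal sketch on a toy frame; name_to_id is a hypothetical helper, not part of the original notebook. Reusing the mapping with Series.map also keeps the row order unchanged, whereas the train.head(3) output further down suggests the merge regrouped rows by name.

```python
import pandas as pd

# Toy frame standing in for the ride data (names are illustrative).
df = pd.DataFrame({"Name": ["Camp Discovery", "Jet Packs", "Camp Discovery", "Stitch Encounter"]})

# pd.factorize assigns integer codes in order of first appearance,
# matching drop_duplicates() followed by range(len(...)).
codes, uniques = pd.factorize(df["Name"])
df["id"] = codes

# Keep the mapping so the same IDs can be applied to another frame.
name_to_id = {name: i for i, name in enumerate(uniques)}
print(df)
print(name_to_id)
```

For the test set, test["Name"].map(name_to_id) would reuse the training IDs; names not seen in training become NaN.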

Drop rows with missing values

train = train.dropna()

test = test.dropna()

Add busy label

def is_busy(time: float):
    """One-hot busy label: [1, 0, 0] below 30 min, [0, 1, 0] for 30-70 min, [0, 0, 1] above 70 min."""
    if time < 30:
        return [1, 0, 0]
    elif 30 <= time <= 70:
        return [0, 1, 0]
    else:
        return [0, 0, 1]
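is_busy is applied row by row. A vectorized sketch of the same thresholds with NumPy, assuming it is called after dropna (a NaN wait time would land in the first class, because NaN >= 30 is False); busy_one_hot is a hypothetical name:

```python
import numpy as np

def busy_one_hot(wait_times):
    """Vectorized sketch of is_busy: <30 -> class 0, 30..70 -> class 1, >70 -> class 2."""
    w = np.asarray(wait_times, dtype=float)
    # Each comparison adds 1 once its threshold is crossed.
    cls = (w >= 30).astype(int) + (w > 70).astype(int)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(3, dtype=int)[cls]

labels = busy_one_hot([0, 29.9, 30, 70, 70.5, 195])
print(labels)
```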

Add time-of-day, weekend, and public holiday features

def hour_modify(x: datetime):
    """Map a timestamp to one of six four-hour time-of-day buckets."""
    Early_Morning = [4, 5, 6, 7]
    Morning = [8, 9, 10, 11]
    Afternoon = [12, 13, 14, 15]
    Evening = [16, 17, 18, 19]
    Night = [20, 21, 22, 23]
    Late_Night = [0, 1, 2, 3]

    if x.hour in Early_Morning:
        return 'Early_Morning'
    elif x.hour in Morning:
        return 'Morning'
    elif x.hour in Afternoon:
        return 'Afternoon'
    elif x.hour in Evening:
        return 'Evening'
    elif x.hour in Night:
        return 'Night'
    else:
        return 'Late_Night'
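Because every bucket in hour_modify spans four consecutive hours starting at midnight, the bucket index is simply hour // 4. A vectorized sketch that should produce the same labels for a datetime Series (HOUR_BUCKETS and hour_bucket are hypothetical names):

```python
import pandas as pd

# Bucket labels in hour order: hours 0-3, 4-7, 8-11, 12-15, 16-19, 20-23.
HOUR_BUCKETS = ["Late_Night", "Early_Morning", "Morning", "Afternoon", "Evening", "Night"]

def hour_bucket(times: pd.Series) -> pd.Series:
    """Vectorized equivalent of hour_modify for a datetime Series."""
    idx = times.dt.hour // 4
    return idx.map(dict(enumerate(HOUR_BUCKETS)))

times = pd.Series(pd.to_datetime(["2020-08-31 03:59", "2020-08-31 04:00",
                                  "2020-08-31 13:38", "2020-08-31 23:10"]))
buckets = hour_bucket(times)
print(buckets.tolist())
```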

# 2020 dates treated as holidays (2020-10-31 is Halloween rather than a public holiday).
HOLIDAYS_2020 = {
    '2020-01-01', '2020-01-24', '2020-01-25', '2020-01-26', '2020-01-27', '2020-01-28',
    '2020-01-29', '2020-01-30', '2020-01-31', '2020-02-01', '2020-02-02', '2020-04-04',
    '2020-05-01', '2020-05-02', '2020-05-03', '2020-05-04', '2020-05-05', '2020-06-25',
    '2020-06-26', '2020-06-27', '2020-10-01', '2020-10-02', '2020-10-03', '2020-10-04',
    '2020-10-05', '2020-10-06', '2020-10-07', '2020-10-08', '2020-10-31',
}

def add_holiday_and_weekend(df: pd.DataFrame, date_field_str='date') -> pd.DataFrame:
    """
    Add IsWeekend and IsHoliday flags to a copy of the dataset.
    """
    new_df = df.copy()
    # weekday(): Monday=0 ... Sunday=6, so Saturday and Sunday are weekend days.
    new_df['IsWeekend'] = new_df[date_field_str].apply(lambda x: 0 if x.weekday() in [0, 1, 2, 3, 4] else 1)
    # A row counts as a holiday if its date is listed above or it falls on a Sunday.
    new_df['IsHoliday'] = new_df[date_field_str].apply(
        lambda x: 1 if (x.date().strftime('%Y-%m-%d') in HOLIDAYS_2020 or x.weekday() == 6) else 0)
    return new_df
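The same flags can be computed without apply by using the .dt accessor. A sketch with a shortened holiday list for illustration (the function name and the HOLIDAYS subset are assumptions); it expects tz-naive timestamps, so it belongs after the time-zone conversion step, which is also where the notebook calls add_holiday_and_weekend:

```python
import pandas as pd

# Shortened holiday list, for illustration only.
HOLIDAYS = pd.to_datetime(["2020-10-01", "2020-10-02", "2020-10-31"])

def add_holiday_and_weekend_vectorized(df: pd.DataFrame, date_field_str="date") -> pd.DataFrame:
    new_df = df.copy()
    dates = new_df[date_field_str]
    dow = dates.dt.dayofweek  # Monday=0 ... Sunday=6
    new_df["IsWeekend"] = (dow >= 5).astype(int)
    # normalize() drops the time of day so the lookup is by calendar date.
    is_listed = dates.dt.normalize().isin(HOLIDAYS)
    # Mirror the original rule: listed dates and Sundays both count as holidays.
    new_df["IsHoliday"] = (is_listed | (dow == 6)).astype(int)
    return new_df

frame = pd.DataFrame({"Time": pd.to_datetime(
    ["2020-10-01 10:00", "2020-10-10 12:00", "2020-10-11 09:30", "2020-10-13 15:00"])})
flags = add_holiday_and_weekend_vectorized(frame, "Time")
print(flags)
```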

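Convert time zones to Shanghai local time

# Train timestamps already carry the Shanghai offset (+08:00); dropping the tz info keeps local wall-clock time.
train['Time'] = train['Time'].dt.tz_localize(None)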

print(train['Time'])

0 2020-08-31 13:38:47.638019

1 2020-08-31 14:09:47.630487

2 2020-08-31 14:26:10.487907

3 2020-08-31 14:29:48.273505

4 2020-08-31 15:09:11.331162

...

23808 2020-10-24 15:23:47.123270

23809 2020-10-24 16:25:44.947592

23810 2020-10-24 17:23:16.431926

23816 2020-10-25 10:26:28.625480

23817 2020-10-25 11:24:10.125045

Name: Time, Length: 14621, dtype: datetime64[ns]

# Test timestamps are in UTC: convert to Shanghai time first, then drop the tz info.
test['Time'] = test['Time'].dt.tz_convert("Asia/Shanghai").dt.tz_localize(None)

print(test['Time'])

0 2020-11-14 18:07:34

1 2020-11-14 18:07:34

2 2020-11-14 18:07:34

3 2020-11-14 18:07:34

4 2020-11-14 18:07:34

...

10340 2020-12-01 19:07:47

10348 2020-12-01 19:07:47

10349 2020-12-01 19:07:47

10350 2020-12-01 19:07:47

10351 2020-12-01 19:07:47

Name: Time, Length: 4016, dtype: datetime64[ns]
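The two conversions differ because the files store time differently: the training file carries a +08:00 offset, while the test file is in UTC. A small sketch reproducing the first row of each printed output above with pandas, using values copied from the notebook:

```python
import pandas as pd

# Test-set style timestamp: stored in UTC.
utc_times = pd.Series(pd.to_datetime(["2020-11-14 10:07:34+00:00"]))
test_local = utc_times.dt.tz_convert("Asia/Shanghai").dt.tz_localize(None)

# Train-set style timestamp: already carries the +08:00 offset.
cst_times = pd.Series(pd.to_datetime(["2020-08-31 13:38:47+08:00"]))
train_local = cst_times.dt.tz_localize(None)  # None removes the tz, keeping local time

print(test_local.iloc[0])   # 2020-11-14 18:07:34
print(train_local.iloc[0])  # 2020-08-31 13:38:47
```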

Add holiday, weekend, time-of-day, and busy-label features

train = add_holiday_and_weekend(train, 'Time')

test = add_holiday_and_weekend(test, 'Time')

train['Hour modify'] = train['Time'].apply(hour_modify)

test['Hour modify'] = test['Time'].apply(hour_modify)

train['Is busy'] = train['Wait time'].apply(is_busy)

train.head(3)

| | Name | Time | Wait time | Weather | Temperature | Max temperature | Min temperature | Pressure | Humidity | Wind degree | Wind speed | Cloud | id | IsWeekend | IsHoliday | Hour modify | Is busy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Camp Discovery | 2020-08-31 13:38:47.638019 | 0.0 | Clouds | 33.28 | 34.00 | 34.00 | 1009 | 66 | 110.0 | 5.0 | 75 | 0 | 0 | 0 | Afternoon | [1, 0, 0] |
| 1 | Camp Discovery | 2020-08-31 14:09:47.630487 | 0.0 | Clouds | 33.80 | 35.56 | 35.56 | 1008 | 70 | 110.0 | 5.0 | 75 | 0 | 0 | 0 | Afternoon | [1, 0, 0] |
| 2 | Camp Discovery | 2020-08-31 14:26:10.487907 | 0.0 | Clouds | 34.11 | 35.56 | 35.56 | 1008 | 66 | 110.0 | 6.0 | 75 | 0 | 0 | 0 | Afternoon | [1, 0, 0] |

Plot Data

plt.figure(figsize=(6, 4))
# Pass the column as x= : positional arguments trigger a FutureWarning in seaborn 0.11.
sns.boxplot(x='Wait time', data=train, orient='h', palette="Set3", linewidth=2.5)
plt.show()

train[['Wait time', 'Temperature', 'Humidity', 'Wind degree', 'Wind speed', 'Cloud']].describe()

| | Wait time | Temperature | Humidity | Wind degree | Wind speed | Cloud |
|---|---|---|---|---|---|---|
| count | 14621.000000 | 14621.000000 | 14621.000000 | 14621.000000 | 14621.000000 | 14621.000000 |
| mean | 23.616374 | 24.423189 | 64.933862 | 127.520347 | 5.352968 | 45.041174 |
| std | 26.388862 | 4.075267 | 14.270916 | 119.544200 | 1.962247 | 31.870902 |
| min | 0.000000 | 14.800000 | 33.000000 | 0.000000 | 0.450000 | 0.000000 |
| 25% | 5.000000 | 21.570000 | 56.000000 | 30.000000 | 4.000000 | 20.000000 |
| 50% | 15.000000 | 23.930000 | 61.000000 | 70.000000 | 5.000000 | 40.000000 |
| 75% | 30.000000 | 26.900000 | 74.000000 | 180.000000 | 7.000000 | 75.000000 |
| max | 195.000000 | 35.050000 | 100.000000 | 360.000000 | 11.000000 | 100.000000 |
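The boxplot's whiskers follow the usual 1.5 x IQR rule (seaborn's default whis=1.5). With the quartiles in the table (Q1 = 5, Q3 = 30), the upper fence is 30 + 1.5 x 25 = 67.5, so by this rule wait times above 67.5 minutes are drawn as individual outlier points. A small sketch of the computation (iqr_fences is a hypothetical helper):

```python
import numpy as np

def iqr_fences(values, k=1.5):
    """Return Tukey fences (Q1 - k*IQR, Q3 + k*IQR), the default boxplot outlier rule."""
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

# Plugging in the quartiles reported by describe(): Q1 = 5, Q3 = 30.
upper = 30 + 1.5 * (30 - 5)
print(upper)  # 67.5
```

On the notebook data, iqr_fences(train['Wait time']) would compute the fences directly.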

Train

train.columns

Index(['Name', 'Time', 'Wait time', 'Weather', 'Temperature',

'Max temperature', 'Min temperature', 'Pressure', 'Humidity',

'Wind degree', 'Wind speed', 'Cloud', 'id', 'IsWeekend', 'IsHoliday',

'Hour modify', 'Is busy'],

dtype='object')

def plot_history(history):
    """Plot training/validation MAE and MSE curves from a Keras History object."""
    hist = pd.DataFrame(history.history)
    hist['epoch'] = history.epoch

    plt.figure()
    plt.xlabel('Epoch')
    plt.ylabel('Mean Abs Error')
    plt.plot(hist['epoch'], hist['mae'], label='Train Error')
    plt.plot(hist['epoch'], hist['val_mae'], label='Val Error')
    plt.legend()

    plt.figure()
    plt.xlabel('Epoch')
    plt.ylabel('Mean Square Error')
    plt.plot(hist['epoch'], hist['mse'], label='Train Error')
    plt.plot(hist['epoch'], hist['val_mse'], label='Val Error')
    plt.legend()
    # Save before plt.show(); after show() the figure may be closed and an empty image saved.
    plt.savefig('MSE.png')
    plt.show()

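def generate_training_data(data: pd.DataFrame, prediction_label,
                           cat_vars=['id', 'IsWeekend', 'IsHoliday', 'Hour modify', 'Weather'],
                           num_vars=['Temperature', 'Pressure', 'Humidity', 'Cloud', 'Wind degree'],
                           should_reshape=True, should_split=True):
    """Encode features and optionally split into train/test sets.

    Returns ((X_train, X_test, y_train, y_test), y_scaler) if should_split and should_reshape,
    (X_train, X_test, y_train, y_test) if only should_split, and (X, y) otherwise.
    """
    x = data.copy()  # use the frame passed in, not the global `train`
    y = np.array(x[prediction_label].to_list())

    # Robust scaling for numeric columns, one-hot encoding for categorical columns.
    numeric_transformer = Pipeline(steps=[('scaler', RobustScaler())])
    # On scikit-learn >= 1.2 this argument is named sparse_output (sparse was removed in 1.4).
    categorical_transformer = Pipeline(steps=[('oneHot', OneHotEncoder(sparse=False))])
    preprocessor = ColumnTransformer(transformers=[
        ('num', numeric_transformer, num_vars),
        ('cat', categorical_transformer, cat_vars)])
    # Note: the encoders are fit on all rows before the split.
    data_transformed = preprocessor.fit_transform(x)

    if should_split:
        if should_reshape:
            # Regression target: scale to [0, 1] and return the scaler for inverse_transform.
            y = y.reshape(-1, 1)
            scaler = MinMaxScaler()
            scaled_y = scaler.fit_transform(y)
            return train_test_split(data_transformed, scaled_y, test_size=0.02, random_state=42), scaler
        else:
            return train_test_split(data_transformed, y, test_size=0.02, random_state=42)
    else:
        return data_transformed, y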
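generate_training_data uses a random train_test_split, so observations from later dates can end up in the training set while earlier ones are held out. If the goal is to forecast future wait times, a chronological split may be a fairer test. A sketch with scikit-learn's TimeSeriesSplit (already imported above but not used in this section), assuming the rows are first sorted by time, e.g. train.sort_values('Time'), since the merge regrouped them by ride:

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# Toy features/targets standing in for time-sorted rows.
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

tscv = TimeSeriesSplit(n_splits=3)
splits = list(tscv.split(X))
for fold, (train_idx, test_idx) in enumerate(splits):
    # Every training index precedes every test index, so no future rows leak in.
    print(fold, train_idx, test_idx)
```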

data, scaler=generate_training_data(train, 'Wait time')

X_train,X_test,y_train,y_test = data

print(y_train.shape)

print(X_train.shape)

(14328, 1)

(14328, 44)

Train a DNN regressor

early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', patience=20)

# Fully connected regressor; only the first layer needs input_shape.
model = keras.Sequential([
    layers.Dense(64, activation="relu", input_shape=(X_train.shape[1],)),
    layers.Dense(512, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(256, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(128, activation="relu"),
    layers.Dense(y_train.shape[1]),  # linear output for the scaled wait time
])

opt = keras.optimizers.Adam(learning_rate=0.001)
model.compile(loss='mean_squared_error', optimizer=opt, metrics=['mae', 'mse'])

history = model.fit(X_train, y_train, epochs=1000, callbacks=[early_stop], validation_split=0.2)

plot_history(history)

Epoch 1/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0127 - mae: 0.0778 - mse: 0.0127 - val_loss: 0.0115 - val_mae: 0.0670 - val_mse: 0.0115

Epoch 2/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0069 - mae: 0.0563 - mse: 0.0069 - val_loss: 0.0065 - val_mae: 0.0515 - val_mse: 0.0065

Epoch 3/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0058 - mae: 0.0510 - mse: 0.0058 - val_loss: 0.0060 - val_mae: 0.0485 - val_mse: 0.0060

Epoch 4/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0052 - mae: 0.0480 - mse: 0.0052 - val_loss: 0.0061 - val_mae: 0.0472 - val_mse: 0.0061

Epoch 5/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0052 - mae: 0.0470 - mse: 0.0052 - val_loss: 0.0055 - val_mae: 0.0464 - val_mse: 0.0055

Epoch 6/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0046 - mae: 0.0449 - mse: 0.0046 - val_loss: 0.0051 - val_mae: 0.0482 - val_mse: 0.0051

Epoch 7/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0046 - mae: 0.0439 - mse: 0.0046 - val_loss: 0.0045 - val_mae: 0.0434 - val_mse: 0.0045

Epoch 8/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0044 - mae: 0.0434 - mse: 0.0044 - val_loss: 0.0053 - val_mae: 0.0485 - val_mse: 0.0053

Epoch 9/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0042 - mae: 0.0423 - mse: 0.0042 - val_loss: 0.0054 - val_mae: 0.0442 - val_mse: 0.0054

Epoch 10/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0039 - mae: 0.0410 - mse: 0.0039 - val_loss: 0.0049 - val_mae: 0.0448 - val_mse: 0.0049

Epoch 11/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0040 - mae: 0.0410 - mse: 0.0040 - val_loss: 0.0041 - val_mae: 0.0422 - val_mse: 0.0041

Epoch 12/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0039 - mae: 0.0409 - mse: 0.0039 - val_loss: 0.0043 - val_mae: 0.0416 - val_mse: 0.0043

Epoch 13/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0037 - mae: 0.0399 - mse: 0.0037 - val_loss: 0.0041 - val_mae: 0.0415 - val_mse: 0.0041

Epoch 14/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0038 - mae: 0.0403 - mse: 0.0038 - val_loss: 0.0040 - val_mae: 0.0432 - val_mse: 0.0040

Epoch 15/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0034 - mae: 0.0390 - mse: 0.0034 - val_loss: 0.0039 - val_mae: 0.0398 - val_mse: 0.0039

Epoch 16/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0034 - mae: 0.0383 - mse: 0.0034 - val_loss: 0.0038 - val_mae: 0.0405 - val_mse: 0.0038

Epoch 17/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0034 - mae: 0.0382 - mse: 0.0034 - val_loss: 0.0038 - val_mae: 0.0391 - val_mse: 0.0038

Epoch 18/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0034 - mae: 0.0381 - mse: 0.0034 - val_loss: 0.0038 - val_mae: 0.0386 - val_mse: 0.0038

Epoch 19/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0032 - mae: 0.0372 - mse: 0.0032 - val_loss: 0.0039 - val_mae: 0.0427 - val_mse: 0.0039

Epoch 20/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0031 - mae: 0.0372 - mse: 0.0031 - val_loss: 0.0039 - val_mae: 0.0397 - val_mse: 0.0039

Epoch 21/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0032 - mae: 0.0368 - mse: 0.0032 - val_loss: 0.0039 - val_mae: 0.0407 - val_mse: 0.0039

Epoch 22/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0029 - mae: 0.0358 - mse: 0.0029 - val_loss: 0.0038 - val_mae: 0.0393 - val_mse: 0.0038

Epoch 23/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0029 - mae: 0.0359 - mse: 0.0029 - val_loss: 0.0035 - val_mae: 0.0384 - val_mse: 0.0035

Epoch 24/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0029 - mae: 0.0352 - mse: 0.0029 - val_loss: 0.0037 - val_mae: 0.0403 - val_mse: 0.0037

Epoch 25/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0028 - mae: 0.0349 - mse: 0.0028 - val_loss: 0.0035 - val_mae: 0.0379 - val_mse: 0.0035

Epoch 26/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0029 - mae: 0.0351 - mse: 0.0029 - val_loss: 0.0039 - val_mae: 0.0395 - val_mse: 0.0039

Epoch 27/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0028 - mae: 0.0349 - mse: 0.0028 - val_loss: 0.0035 - val_mae: 0.0362 - val_mse: 0.0035

Epoch 28/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0027 - mae: 0.0342 - mse: 0.0027 - val_loss: 0.0042 - val_mae: 0.0387 - val_mse: 0.0042

Epoch 29/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0029 - mae: 0.0354 - mse: 0.0029 - val_loss: 0.0037 - val_mae: 0.0376 - val_mse: 0.0037

Epoch 30/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0027 - mae: 0.0344 - mse: 0.0027 - val_loss: 0.0037 - val_mae: 0.0397 - val_mse: 0.0037

Epoch 31/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0027 - mae: 0.0342 - mse: 0.0027 - val_loss: 0.0036 - val_mae: 0.0371 - val_mse: 0.0036

Epoch 32/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0026 - mae: 0.0339 - mse: 0.0026 - val_loss: 0.0037 - val_mae: 0.0365 - val_mse: 0.0037

Epoch 33/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0026 - mae: 0.0337 - mse: 0.0026 - val_loss: 0.0036 - val_mae: 0.0364 - val_mse: 0.0036

Epoch 34/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0024 - mae: 0.0326 - mse: 0.0024 - val_loss: 0.0036 - val_mae: 0.0373 - val_mse: 0.0036

Epoch 35/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0025 - mae: 0.0329 - mse: 0.0025 - val_loss: 0.0036 - val_mae: 0.0367 - val_mse: 0.0036

Epoch 36/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0024 - mae: 0.0321 - mse: 0.0024 - val_loss: 0.0036 - val_mae: 0.0363 - val_mse: 0.0036

Epoch 37/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0024 - mae: 0.0322 - mse: 0.0024 - val_loss: 0.0034 - val_mae: 0.0363 - val_mse: 0.0034

Epoch 38/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0024 - mae: 0.0323 - mse: 0.0024 - val_loss: 0.0036 - val_mae: 0.0354 - val_mse: 0.0036

Epoch 39/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0025 - mae: 0.0327 - mse: 0.0025 - val_loss: 0.0036 - val_mae: 0.0360 - val_mse: 0.0036

Epoch 40/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0023 - mae: 0.0319 - mse: 0.0023 - val_loss: 0.0034 - val_mae: 0.0360 - val_mse: 0.0034

Epoch 41/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0024 - mae: 0.0318 - mse: 0.0024 - val_loss: 0.0034 - val_mae: 0.0358 - val_mse: 0.0034

Epoch 42/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0023 - mae: 0.0314 - mse: 0.0023 - val_loss: 0.0036 - val_mae: 0.0366 - val_mse: 0.0036

Epoch 43/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0312 - mse: 0.0022 - val_loss: 0.0034 - val_mae: 0.0361 - val_mse: 0.0034

Epoch 44/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0312 - mse: 0.0022 - val_loss: 0.0035 - val_mae: 0.0354 - val_mse: 0.0035

Epoch 45/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0023 - mae: 0.0314 - mse: 0.0023 - val_loss: 0.0035 - val_mae: 0.0386 - val_mse: 0.0035

Epoch 46/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0310 - mse: 0.0022 - val_loss: 0.0035 - val_mae: 0.0359 - val_mse: 0.0035

Epoch 47/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0313 - mse: 0.0022 - val_loss: 0.0038 - val_mae: 0.0374 - val_mse: 0.0038

Epoch 48/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0308 - mse: 0.0022 - val_loss: 0.0034 - val_mae: 0.0350 - val_mse: 0.0034

Epoch 49/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0307 - mse: 0.0022 - val_loss: 0.0035 - val_mae: 0.0357 - val_mse: 0.0035

Epoch 50/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0305 - mse: 0.0021 - val_loss: 0.0035 - val_mae: 0.0381 - val_mse: 0.0035

Epoch 51/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0309 - mse: 0.0022 - val_loss: 0.0034 - val_mae: 0.0357 - val_mse: 0.0034

Epoch 52/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0304 - mse: 0.0021 - val_loss: 0.0034 - val_mae: 0.0347 - val_mse: 0.0034

Epoch 53/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0022 - mae: 0.0304 - mse: 0.0022 - val_loss: 0.0035 - val_mae: 0.0354 - val_mse: 0.0035

Epoch 54/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0301 - mse: 0.0021 - val_loss: 0.0033 - val_mae: 0.0366 - val_mse: 0.0033

Epoch 55/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0301 - mse: 0.0021 - val_loss: 0.0034 - val_mae: 0.0348 - val_mse: 0.0034

Epoch 56/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0288 - mse: 0.0019 - val_loss: 0.0033 - val_mae: 0.0347 - val_mse: 0.0033

Epoch 57/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0298 - mse: 0.0020 - val_loss: 0.0035 - val_mae: 0.0361 - val_mse: 0.0035

Epoch 58/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0299 - mse: 0.0021 - val_loss: 0.0034 - val_mae: 0.0353 - val_mse: 0.0034

Epoch 59/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0299 - mse: 0.0021 - val_loss: 0.0032 - val_mae: 0.0361 - val_mse: 0.0032

Epoch 60/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0021 - mae: 0.0296 - mse: 0.0021 - val_loss: 0.0033 - val_mae: 0.0344 - val_mse: 0.0033

Epoch 61/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0290 - mse: 0.0020 - val_loss: 0.0033 - val_mae: 0.0346 - val_mse: 0.0033

Epoch 62/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0297 - mse: 0.0020 - val_loss: 0.0033 - val_mae: 0.0351 - val_mse: 0.0033

Epoch 63/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0294 - mse: 0.0020 - val_loss: 0.0034 - val_mae: 0.0352 - val_mse: 0.0034

Epoch 64/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0294 - mse: 0.0020 - val_loss: 0.0034 - val_mae: 0.0360 - val_mse: 0.0034

Epoch 65/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0292 - mse: 0.0020 - val_loss: 0.0035 - val_mae: 0.0383 - val_mse: 0.0035

Epoch 66/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0295 - mse: 0.0020 - val_loss: 0.0034 - val_mae: 0.0348 - val_mse: 0.0034

Epoch 67/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0020 - mae: 0.0292 - mse: 0.0020 - val_loss: 0.0034 - val_mae: 0.0346 - val_mse: 0.0034

Epoch 68/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0287 - mse: 0.0019 - val_loss: 0.0033 - val_mae: 0.0347 - val_mse: 0.0033

Epoch 69/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0284 - mse: 0.0018 - val_loss: 0.0032 - val_mae: 0.0348 - val_mse: 0.0032

Epoch 70/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0289 - mse: 0.0019 - val_loss: 0.0034 - val_mae: 0.0355 - val_mse: 0.0034

Epoch 71/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0290 - mse: 0.0019 - val_loss: 0.0035 - val_mae: 0.0372 - val_mse: 0.0035

Epoch 72/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0284 - mse: 0.0019 - val_loss: 0.0034 - val_mae: 0.0343 - val_mse: 0.0034

Epoch 73/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0283 - mse: 0.0018 - val_loss: 0.0033 - val_mae: 0.0364 - val_mse: 0.0033

Epoch 74/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0289 - mse: 0.0019 - val_loss: 0.0033 - val_mae: 0.0364 - val_mse: 0.0033

Epoch 75/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0282 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0345 - val_mse: 0.0034

Epoch 76/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0285 - mse: 0.0019 - val_loss: 0.0033 - val_mae: 0.0344 - val_mse: 0.0033

Epoch 77/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0283 - mse: 0.0019 - val_loss: 0.0034 - val_mae: 0.0352 - val_mse: 0.0034

Epoch 78/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0278 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0355 - val_mse: 0.0034

Epoch 79/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0277 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0362 - val_mse: 0.0034

Epoch 80/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0017 - mae: 0.0277 - mse: 0.0017 - val_loss: 0.0033 - val_mae: 0.0361 - val_mse: 0.0033

Epoch 81/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0277 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0369 - val_mse: 0.0034

Epoch 82/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0282 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0352 - val_mse: 0.0034

Epoch 83/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0276 - mse: 0.0018 - val_loss: 0.0035 - val_mae: 0.0354 - val_mse: 0.0035

Epoch 84/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0282 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0343 - val_mse: 0.0034

Epoch 85/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0019 - mae: 0.0280 - mse: 0.0019 - val_loss: 0.0035 - val_mae: 0.0375 - val_mse: 0.0035

Epoch 86/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0017 - mae: 0.0273 - mse: 0.0017 - val_loss: 0.0035 - val_mae: 0.0352 - val_mse: 0.0035

Epoch 87/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0277 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0348 - val_mse: 0.0034

Epoch 88/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0017 - mae: 0.0273 - mse: 0.0017 - val_loss: 0.0033 - val_mae: 0.0346 - val_mse: 0.0033

Epoch 89/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.0018 - mae: 0.0276 - mse: 0.0018 - val_loss: 0.0034 - val_mae: 0.0354 - val_mse: 0.0034
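EarlyStopping(monitor='val_loss', patience=20) stops once val_loss has gone 20 consecutive epochs without improving. Because restore_best_weights defaults to False, the model keeps the weights from the final epoch, not the best one. A simplified sketch of the stopping rule in plain Python (ignoring min_delta, which defaults to 0; early_stop_epoch is a hypothetical helper):

```python
def early_stop_epoch(val_losses, patience):
    """Return (best_epoch, stop_epoch), 1-indexed, under a simplified EarlyStopping rule."""
    best = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses)

print(early_stop_epoch([5, 4, 3, 3.5, 3.2, 3.1], patience=3))  # (3, 6)
```

Under this rule, the run above stopping at epoch 89 implies the last improvement was at epoch 69, consistent with the val_loss of 0.0032 logged there. Passing restore_best_weights=True to EarlyStopping would keep that epoch's weights instead.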

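y_pred = model.predict(X_test)
# MSE on the MinMax-scaled target; scikit-learn expects (y_true, y_pred).
err = mean_squared_error(y_test, y_pred)

# Map predictions and targets back to minutes.
y_pred_ori = scaler.inverse_transform(y_pred)
y_test_ori = scaler.inverse_transform(y_test)

print(err)
print(y_pred_ori[:10])
print(y_test_ori[:10])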

0.0023450270504444958

[[ 7.5200114]

[36.162247 ]

[-1.5508894]

[11.4153385]

[30.12201 ]

[ 4.0796814]

[ 2.721789 ]

[43.332848 ]

[23.49451 ]

[47.286026 ]]

[[10.]

[30.]

[ 0.]

[15.]

[15.]

[ 5.]

[ 5.]

[60.]

[10.]

[50.]]
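MinMaxScaler maps x to (x - min) / (max - min), so inverse_transform multiplies by the range and adds the minimum back. Here the target was fit on training wait times spanning 0 to 195 minutes (see describe() above), so a scaled error converts to minutes by multiplying by 195: a val_mae around 0.035 is roughly 6.8 minutes. The third prediction above is also slightly negative (-1.55), which is impossible for a wait time, so clipping at 0 may help. A sketch on toy values (the numbers are illustrative, not from the notebook):

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Toy wait times in minutes (illustrative only).
y = np.array([[0.0], [15.0], [30.0], [195.0]])
scaler = MinMaxScaler().fit(y)
y_scaled = scaler.transform(y)

# inverse_transform undoes x_scaled = (x - min) / (max - min).
manual = y_scaled * (scaler.data_max_ - scaler.data_min_) + scaler.data_min_

# Hypothetical model outputs on the scaled axis; the first is slightly negative.
pred_scaled = np.array([[-0.008], [0.1], [0.14], [0.9]])
pred_min = np.clip(scaler.inverse_transform(pred_scaled), 0, None)  # wait times cannot be negative
mae_minutes = np.mean(np.abs(pred_min - y))
print(pred_min.ravel(), mae_minutes)
```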

diff = y_test_ori - y_pred_ori

plt.figure(figsize=(20, 10))
plt.plot(y_pred_ori, label="Prediction")
plt.plot(y_test_ori, label="True")
plt.plot(diff, label="Difference")
plt.ylabel('Wait time (min)')
# Test rows come from a random split, so the x-axis is a sample index, not a date.
plt.xlabel('Test sample')
plt.legend()
plt.savefig('DNN_regression.png')

Train on categories

In this case, we pre-process the wait time into 3 categories, using the one-hot labels produced by is_busy:

- [1, 0, 0]: Wait time < 30 min
- [0, 1, 0]: 30 min <= Wait time <= 70 min
- [0, 0, 1]: Wait time > 70 min
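To read the classifier's softmax output (or a one-hot label) back as one of these categories, take the argmax of each row. A minimal sketch; LABELS and decode_busy are hypothetical names:

```python
import numpy as np

LABELS = ["< 30 min", "30 to 70 min", "> 70 min"]

def decode_busy(probs):
    """Map softmax rows or one-hot rows to class indices and readable labels."""
    idx = np.argmax(np.asarray(probs), axis=1)
    return idx, [LABELS[i] for i in idx]

idx, names = decode_busy([[0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0, 1, 0]])
print(idx, names)
```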

cat_vars = ['Name', 'IsWeekend', 'IsHoliday', 'Hour modify', 'Weather']
num_vars = ['Temperature', 'Pressure', 'Humidity', 'Cloud', 'Wind degree']

X_train,X_test,y_train,y_test = generate_training_data(train, 'Is busy', cat_vars=cat_vars, num_vars=num_vars, should_reshape=False)

print(y_train.shape)

print(X_train.shape)

(14328, 3)

(14328, 44)

early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', patience=20)

# Same architecture as the regressor, with a softmax output over the 3 busy classes.
model = keras.Sequential([
    layers.Dense(64, activation="relu", input_shape=(X_train.shape[1],)),
    layers.Dense(512, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(256, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(128, activation="relu"),
    layers.Dense(y_train.shape[1], activation='softmax'),
])

opt = keras.optimizers.Adam(learning_rate=0.001)
# 'mae' and 'mse' are tracked because plot_history plots them.
model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=['acc', 'mae', 'mse'])

history = model.fit(X_train, y_train, epochs=1000, callbacks=[early_stop], validation_split=0.2)

plot_history(history)

Epoch 1/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2155 - acc: 0.9014 - mae: 0.0904 - mse: 0.0453 - val_loss: 0.4462 - val_acc: 0.8472 - val_mae: 0.1254 - val_mse: 0.0757

Epoch 2/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2120 - acc: 0.9025 - mae: 0.0880 - mse: 0.0441 - val_loss: 0.4410 - val_acc: 0.8381 - val_mae: 0.1258 - val_mse: 0.0754

Epoch 3/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2112 - acc: 0.9035 - mae: 0.0883 - mse: 0.0443 - val_loss: 0.4480 - val_acc: 0.8416 - val_mae: 0.1229 - val_mse: 0.0752

Epoch 4/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2063 - acc: 0.9074 - mae: 0.0856 - mse: 0.0429 - val_loss: 0.4622 - val_acc: 0.8458 - val_mae: 0.1219 - val_mse: 0.0739

Epoch 5/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2055 - acc: 0.9060 - mae: 0.0859 - mse: 0.0430 - val_loss: 0.4529 - val_acc: 0.8423 - val_mae: 0.1225 - val_mse: 0.0752

Epoch 6/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1968 - acc: 0.9093 - mae: 0.0822 - mse: 0.0413 - val_loss: 0.4663 - val_acc: 0.8458 - val_mae: 0.1188 - val_mse: 0.0763

Epoch 7/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2024 - acc: 0.9108 - mae: 0.0838 - mse: 0.0419 - val_loss: 0.5039 - val_acc: 0.8503 - val_mae: 0.1188 - val_mse: 0.0762

Epoch 8/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2025 - acc: 0.9101 - mae: 0.0841 - mse: 0.0419 - val_loss: 0.5043 - val_acc: 0.8486 - val_mae: 0.1139 - val_mse: 0.0758

Epoch 9/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2047 - acc: 0.9053 - mae: 0.0851 - mse: 0.0432 - val_loss: 0.4379 - val_acc: 0.8514 - val_mae: 0.1199 - val_mse: 0.0717

Epoch 10/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1961 - acc: 0.9114 - mae: 0.0826 - mse: 0.0412 - val_loss: 0.4864 - val_acc: 0.8479 - val_mae: 0.1186 - val_mse: 0.0759

Epoch 11/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1993 - acc: 0.9097 - mae: 0.0819 - mse: 0.0412 - val_loss: 0.4532 - val_acc: 0.8465 - val_mae: 0.1209 - val_mse: 0.0736

Epoch 12/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1934 - acc: 0.9108 - mae: 0.0816 - mse: 0.0409 - val_loss: 0.4550 - val_acc: 0.8548 - val_mae: 0.1178 - val_mse: 0.0731

Epoch 13/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1870 - acc: 0.9135 - mae: 0.0788 - mse: 0.0394 - val_loss: 0.4832 - val_acc: 0.8416 - val_mae: 0.1207 - val_mse: 0.0762

Epoch 14/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1905 - acc: 0.9126 - mae: 0.0793 - mse: 0.0398 - val_loss: 0.5012 - val_acc: 0.8486 - val_mae: 0.1182 - val_mse: 0.0759

Epoch 15/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1882 - acc: 0.9163 - mae: 0.0781 - mse: 0.0393 - val_loss: 0.5033 - val_acc: 0.8503 - val_mae: 0.1180 - val_mse: 0.0750

Epoch 16/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1890 - acc: 0.9164 - mae: 0.0788 - mse: 0.0395 - val_loss: 0.4769 - val_acc: 0.8500 - val_mae: 0.1179 - val_mse: 0.0747

Epoch 17/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1904 - acc: 0.9094 - mae: 0.0799 - mse: 0.0402 - val_loss: 0.5425 - val_acc: 0.8524 - val_mae: 0.1129 - val_mse: 0.0751

Epoch 18/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1865 - acc: 0.9167 - mae: 0.0763 - mse: 0.0386 - val_loss: 0.4673 - val_acc: 0.8510 - val_mae: 0.1175 - val_mse: 0.0738

Epoch 19/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1857 - acc: 0.9156 - mae: 0.0769 - mse: 0.0386 - val_loss: 0.4718 - val_acc: 0.8458 - val_mae: 0.1176 - val_mse: 0.0735

Epoch 20/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1864 - acc: 0.9157 - mae: 0.0776 - mse: 0.0390 - val_loss: 0.5050 - val_acc: 0.8423 - val_mae: 0.1175 - val_mse: 0.0760

Epoch 21/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1795 - acc: 0.9187 - mae: 0.0753 - mse: 0.0378 - val_loss: 0.4781 - val_acc: 0.8514 - val_mae: 0.1176 - val_mse: 0.0739

Epoch 22/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1805 - acc: 0.9169 - mae: 0.0750 - mse: 0.0379 - val_loss: 0.4897 - val_acc: 0.8489 - val_mae: 0.1166 - val_mse: 0.0754

Epoch 23/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1818 - acc: 0.9181 - mae: 0.0757 - mse: 0.0378 - val_loss: 0.5106 - val_acc: 0.8510 - val_mae: 0.1143 - val_mse: 0.0748

Epoch 24/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1819 - acc: 0.9193 - mae: 0.0748 - mse: 0.0378 - val_loss: 0.5458 - val_acc: 0.8440 - val_mae: 0.1169 - val_mse: 0.0771

Epoch 25/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1768 - acc: 0.9211 - mae: 0.0736 - mse: 0.0367 - val_loss: 0.5249 - val_acc: 0.8419 - val_mae: 0.1169 - val_mse: 0.0773

Epoch 26/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1752 - acc: 0.9232 - mae: 0.0721 - mse: 0.0362 - val_loss: 0.5045 - val_acc: 0.8475 - val_mae: 0.1176 - val_mse: 0.0743

Epoch 27/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1733 - acc: 0.9235 - mae: 0.0722 - mse: 0.0361 - val_loss: 0.5165 - val_acc: 0.8444 - val_mae: 0.1163 - val_mse: 0.0761

Epoch 28/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1762 - acc: 0.9205 - mae: 0.0729 - mse: 0.0366 - val_loss: 0.4968 - val_acc: 0.8479 - val_mae: 0.1153 - val_mse: 0.0741

Epoch 29/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.1708 - acc: 0.9238 - mae: 0.0709 - mse: 0.0353 - val_loss: 0.5015 - val_acc: 0.8454 - val_mae: 0.1162 - val_mse: 0.0761


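y_pred = model.predict(X_test)

def print_acc(pred, true):
    # Count rows whose predicted class (arg-max of the softmax output)
    # matches the true class (arg-max of the one-hot label)
    right = 0
    for i in range(len(pred)):
        p = np.argmax(pred[i])
        t = np.argmax(true[i])
        if p == t:
            right += 1
    print(f"acc: {right}/{len(pred)}, {right/len(pred)}")

print_acc(y_pred, y_test)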

acc: 250/293, 0.8532423208191127
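The loop in `print_acc` compares the arg-max class of each prediction with the arg-max of its one-hot label. A vectorized NumPy sketch of the same rule is shown below; it also reports the accuracy of always predicting the most frequent true class, which gives a rough reference point for judging an accuracy such as 85%. The function name `accuracy_with_baseline` is hypothetical, and the sketch assumes `pred` and `true` are 2-D arrays with one column per class.

```python
import numpy as np


def accuracy_with_baseline(pred, true):
    """Arg-max accuracy (same rule as print_acc) plus a majority-class baseline."""
    pred = np.asarray(pred)
    true = np.asarray(true)
    pred_cls = pred.argmax(axis=1)  # predicted class index per row
    true_cls = true.argmax(axis=1)  # true class index recovered from the one-hot label
    acc = float(np.mean(pred_cls == true_cls))
    # Accuracy obtained by always predicting the most common true class
    counts = np.bincount(true_cls, minlength=true.shape[1])
    baseline = float(counts.max() / len(true_cls))
    return acc, baseline
```

Called as `accuracy_with_baseline(y_pred, y_test)`, the first value should match the ratio printed by `print_acc`; if the second value is close to it, the model is doing little better than always guessing the majority class.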

Training using per-facility categories¶

In this example, we use each facility's average wait time to assign every observation to one of three categories:

- If the wait time is more than 5 minutes below the facility's average wait time, it is not busy.
- If the wait time is within 5 minutes of the average wait time, it is normal.
- If the wait time is more than 5 minutes above the average wait time, it is busy.

This model assumes that facilities differ: some are more popular than others. We therefore use the model to predict whether a facility's wait time is below, around, or above its own average, rather than comparing absolute wait times across facilities.

def is_busy_2(row):
    # One-hot label [not busy, normal, busy], using a ±5-minute band
    # around the facility's own average wait time
    avg, wait = row['Average wait time'], row['Wait time']
    if wait < avg - 5:
        return [1, 0, 0]
    elif wait <= avg + 5:
        return [0, 1, 0]
    else:
        return [0, 0, 1]

def calculate_average_wait_time(df: pd.DataFrame) -> pd.DataFrame:
    # Mean wait time per facility over the whole training period
    averages = (df.groupby('Name')['Wait time'].mean()
                  .rename('Average wait time')
                  .reset_index())
    # Attach each facility's average to every row of the original data
    return averages.merge(df, on='Name', how='right')

average_sampled_train = calculate_average_wait_time(train)

average_sampled_train['Is busy'] = average_sampled_train.apply(is_busy_2, axis=1)

average_sampled_train.sample(20)

| | Name | Average wait time | Time | Wait time | Weather | Temperature | Max temperature | Min temperature | Pressure | Humidity | Wind degree | Wind speed | Cloud | id | IsWeekend | IsHoliday | Hour modify | Is busy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 6302 | Dumbo the Flying Elephant | 24.476950 | 2020-10-03 17:19:47.471631 | 40.0 | Clouds | 23.73 | 24.00 | 24.00 | 1012 | 78 | 90.0 | 6.0 | 75 | 12 | 1 | 1 | Evening | [0, 0, 1] |
| 10102 | Siren's Revenge | 5.000000 | 2020-10-21 13:22:34.657099 | 5.0 | Clouds | 18.36 | 19.44 | 19.44 | 1018 | 88 | 340.0 | 5.0 | 90 | 18 | 0 | 0 | Afternoon | [0, 1, 0] |
| 5149 | The Many Adventures of Winnie the Pooh | 21.727129 | 2020-10-08 13:17:12.792022 | 20.0 | Clouds | 23.83 | 25.00 | 25.00 | 1022 | 53 | 20.0 | 9.0 | 75 | 10 | 0 | 1 | Afternoon | [0, 1, 0] |
| 8802 | Jet Packs | 34.943639 | 2020-10-17 13:21:49.642192 | 40.0 | Clouds | 21.54 | 22.00 | 22.00 | 1023 | 56 | 10.0 | 5.0 | 20 | 16 | 1 | 0 | Afternoon | [0, 0, 1] |
| 12292 | Rex’s Racer | 56.937984 | 2020-09-04 13:17:48.730671 | 90.0 | Clouds | 33.22 | 36.67 | 36.67 | 1012 | 45 | 170.0 | 6.0 | 91 | 28 | 0 | 0 | Afternoon | [0, 0, 1] |
| 4527 | Seven Dwarfs Mine Train | 52.788310 | 2020-10-09 11:21:36.066646 | 45.0 | Clouds | 23.20 | 24.00 | 24.00 | 1021 | 53 | 10.0 | 6.0 | 23 | 9 | 0 | 0 | Morning | [1, 0, 0] |
| 13143 | Slinky Dog Spin | 24.613601 | 2020-09-22 16:21:22.856577 | 20.0 | Clouds | 24.80 | 25.00 | 25.00 | 1014 | 61 | 60.0 | 6.0 | 40 | 29 | 0 | 0 | Evening | [0, 1, 0] |
| 5309 | The Many Adventures of Winnie the Pooh | 21.727129 | 2020-10-22 17:23:25.739682 | 40.0 | Clouds | 19.46 | 20.00 | 20.00 | 1016 | 68 | 10.0 | 8.0 | 40 | 10 | 0 | 0 | Evening | [0, 0, 1] |
| 5307 | The Many Adventures of Winnie the Pooh | 21.727129 | 2020-10-22 15:23:48.253335 | 10.0 | Clouds | 22.51 | 23.33 | 23.33 | 1016 | 56 | 340.0 | 5.0 | 13 | 10 | 0 | 0 | Afternoon | [1, 0, 0] |
| 12042 | Roaring Rapids | 31.629555 | 2020-09-25 21:18:18.834521 | 5.0 | Rain | 22.66 | 23.00 | 23.00 | 1014 | 69 | 10.0 | 5.0 | 100 | 23 | 0 | 0 | Night | [1, 0, 0] |
| 5539 | Voyage to the Crystal Grotto | 17.301459 | 2020-09-18 17:18:57.544340 | 40.0 | Clouds | 19.43 | 20.00 | 20.00 | 1018 | 88 | 270.0 | 3.0 | 40 | 11 | 0 | 0 | Evening | [0, 0, 1] |
| 11842 | Roaring Rapids | 31.629555 | 2020-09-08 14:21:00.395020 | 20.0 | Clouds | 33.61 | 35.00 | 35.00 | 1010 | 49 | 180.0 | 8.0 | 20 | 23 | 0 | 0 | Afternoon | [1, 0, 0] |
| 6323 | Dumbo the Flying Elephant | 24.476950 | 2020-10-05 17:20:52.785435 | 30.0 | Clouds | 20.67 | 21.11 | 21.11 | 1020 | 52 | 10.0 | 8.0 | 36 | 12 | 0 | 1 | Evening | [0, 0, 1] |
| 11943 | Roaring Rapids | 31.629555 | 2020-09-17 16:20:41.428588 | 25.0 | Rain | 21.05 | 21.67 | 21.67 | 1010 | 100 | 350.0 | 7.0 | 75 | 23 | 0 | 0 | Evening | [1, 0, 0] |
| 5281 | The Many Adventures of Winnie the Pooh | 21.727129 | 2020-10-20 10:24:19.155382 | 20.0 | Clouds | 21.59 | 22.00 | 22.00 | 1025 | 53 | 50.0 | 6.0 | 40 | 10 | 0 | 0 | Morning | [0, 1, 0] |
| 9435 | Buzz Lightyear Planet Rescue | 7.097039 | 2020-10-19 15:24:32.051678 | 10.0 | Clouds | 21.24 | 23.33 | 23.33 | 1023 | 56 | 30.0 | 5.0 | 40 | 17 | 0 | 0 | Afternoon | [0, 1, 0] |
| 1890 | “Once Upon a Time” Adventure | 7.246127 | 2020-09-17 10:21:16.322591 | 5.0 | Rain | 22.64 | 23.00 | 23.00 | 1010 | 88 | 40.0 | 7.0 | 75 | 4 | 0 | 0 | Morning | [0, 1, 0] |
| 9942 | Siren's Revenge | 5.000000 | 2020-10-07 17:21:19.897009 | 5.0 | Clouds | 21.37 | 22.00 | 22.00 | 1021 | 56 | 20.0 | 8.0 | 20 | 18 | 0 | 1 | Evening | [0, 1, 0] |
| 2997 | Hunny Pot Spin | 9.258114 | 2020-09-12 09:22:28.558742 | 5.0 | Clouds | 26.08 | 27.78 | 27.78 | 1013 | 78 | 330.0 | 3.0 | 20 | 7 | 1 | 0 | Morning | [0, 1, 0] |
| 13470 | Slinky Dog Spin | 24.613601 | 2020-10-19 12:25:41.694352 | 40.0 | Clouds | 20.87 | 22.78 | 22.78 | 1024 | 56 | 20.0 | 6.0 | 40 | 29 | 0 | 0 | Afternoon | [0, 0, 1] |
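The row-wise `apply(is_busy_2, axis=1)` calls a Python function once per row. A vectorized sketch of the same labelling rule is shown below, assuming the columns `Name` and `Wait time` and the same ±5-minute band; it computes the per-facility mean with `groupby(...).transform('mean')` and picks the class with `np.select`. The helper `label_busy_vectorized` and the `Busy class` column are hypothetical names, and the sketch returns a class index (0 = not busy, 1 = normal, 2 = busy) rather than a one-hot list.

```python
import numpy as np
import pandas as pd


def label_busy_vectorized(df: pd.DataFrame, band: float = 5.0) -> pd.DataFrame:
    out = df.copy()
    # Per-facility mean wait time, broadcast back to every row of that facility
    out['Average wait time'] = out.groupby('Name')['Wait time'].transform('mean')
    wait, avg = out['Wait time'], out['Average wait time']
    # 0 = more than `band` minutes below average, 1 = within the band,
    # 2 = everything else (above the band, or a missing wait time)
    out['Busy class'] = np.select(
        [wait < avg - band, wait <= avg + band], [0, 1], default=2)
    return out
```

If one-hot lists are needed, `np.eye(3, dtype=int)[out['Busy class'].to_numpy()]` turns the class indices back into rows such as `[0, 1, 0]`. As with `is_busy_2`, a missing wait time falls through to the busy class, so it may be worth dropping such rows first.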

cat_vars=['Name', 'IsWeekend','IsHoliday','Hour modify', 'Weather']

num_vars=['Temperature', 'Pressure', 'Humidity', 'Cloud', 'Wind degree', ]

X_train,X_test,y_train,y_test = generate_training_data(average_sampled_train, 'Is busy', cat_vars=cat_vars, num_vars=num_vars, should_reshape=False)

early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', patience=20)

model = keras.Sequential([
    # Only the first layer needs input_shape; later layers infer their input size
    layers.Dense(64, activation="relu", input_shape=(X_train.shape[1],)),
    layers.Dense(512, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(256, activation="relu"),
    layers.Dropout(0.5),
    # layers.Dense(512, activation="relu"),
    # layers.Dropout(0.5),
    # layers.Dense(512, activation="relu"),
    # layers.Dropout(0.5),
    # layers.Dense(256, activation="relu"),
    # layers.Dropout(0.5),
    layers.Dense(128, activation="relu"),
    # One softmax unit per category: not busy, normal, busy
    layers.Dense(y_train.shape[1], activation='softmax'),
])

opt = keras.optimizers.Adam(learning_rate=0.001)
model.compile(loss='categorical_crossentropy',
              optimizer=opt, metrics=['acc', 'mae', 'mse'])

history = model.fit(X_train, y_train, epochs=1000, callbacks=[early_stop], validation_split=0.2)
plot_history(history)

Epoch 1/1000

359/359 [==============================] - 1s 4ms/step - loss: 0.5742 - acc: 0.7383 - mae: 0.2304 - mse: 0.1161 - val_loss: 0.4259 - val_acc: 0.8102 - val_mae: 0.1846 - val_mse: 0.0883

Epoch 2/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.4323 - acc: 0.7988 - mae: 0.1772 - mse: 0.0895 - val_loss: 0.4156 - val_acc: 0.8123 - val_mae: 0.1713 - val_mse: 0.0858

Epoch 3/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.4067 - acc: 0.8137 - mae: 0.1683 - mse: 0.0848 - val_loss: 0.4012 - val_acc: 0.8158 - val_mae: 0.1741 - val_mse: 0.0836

Epoch 4/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3872 - acc: 0.8274 - mae: 0.1599 - mse: 0.0805 - val_loss: 0.3918 - val_acc: 0.8266 - val_mae: 0.1623 - val_mse: 0.0805

Epoch 5/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3733 - acc: 0.8318 - mae: 0.1553 - mse: 0.0777 - val_loss: 0.3877 - val_acc: 0.8200 - val_mae: 0.1607 - val_mse: 0.0801

Epoch 6/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3590 - acc: 0.8396 - mae: 0.1489 - mse: 0.0750 - val_loss: 0.3819 - val_acc: 0.8248 - val_mae: 0.1600 - val_mse: 0.0793

Epoch 7/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3567 - acc: 0.8396 - mae: 0.1478 - mse: 0.0742 - val_loss: 0.3821 - val_acc: 0.8287 - val_mae: 0.1570 - val_mse: 0.0788

Epoch 8/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3472 - acc: 0.8437 - mae: 0.1445 - mse: 0.0725 - val_loss: 0.3714 - val_acc: 0.8339 - val_mae: 0.1493 - val_mse: 0.0763

Epoch 9/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3415 - acc: 0.8475 - mae: 0.1421 - mse: 0.0711 - val_loss: 0.3853 - val_acc: 0.8308 - val_mae: 0.1499 - val_mse: 0.0786

Epoch 10/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3323 - acc: 0.8504 - mae: 0.1386 - mse: 0.0694 - val_loss: 0.3726 - val_acc: 0.8374 - val_mae: 0.1404 - val_mse: 0.0752

Epoch 11/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3264 - acc: 0.8540 - mae: 0.1360 - mse: 0.0681 - val_loss: 0.3828 - val_acc: 0.8381 - val_mae: 0.1456 - val_mse: 0.0760

Epoch 12/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3233 - acc: 0.8567 - mae: 0.1334 - mse: 0.0671 - val_loss: 0.3683 - val_acc: 0.8353 - val_mae: 0.1471 - val_mse: 0.0748

Epoch 13/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3184 - acc: 0.8574 - mae: 0.1322 - mse: 0.0663 - val_loss: 0.3639 - val_acc: 0.8454 - val_mae: 0.1422 - val_mse: 0.0734

Epoch 14/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3033 - acc: 0.8622 - mae: 0.1269 - mse: 0.0631 - val_loss: 0.3802 - val_acc: 0.8398 - val_mae: 0.1372 - val_mse: 0.0756

Epoch 15/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.3044 - acc: 0.8618 - mae: 0.1260 - mse: 0.0634 - val_loss: 0.3642 - val_acc: 0.8391 - val_mae: 0.1368 - val_mse: 0.0728

Epoch 16/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2985 - acc: 0.8649 - mae: 0.1247 - mse: 0.0624 - val_loss: 0.3836 - val_acc: 0.8364 - val_mae: 0.1338 - val_mse: 0.0762

Epoch 17/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2926 - acc: 0.8694 - mae: 0.1215 - mse: 0.0611 - val_loss: 0.3739 - val_acc: 0.8423 - val_mae: 0.1384 - val_mse: 0.0740

Epoch 18/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2936 - acc: 0.8684 - mae: 0.1221 - mse: 0.0611 - val_loss: 0.3752 - val_acc: 0.8385 - val_mae: 0.1329 - val_mse: 0.0732

Epoch 19/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2824 - acc: 0.8709 - mae: 0.1180 - mse: 0.0591 - val_loss: 0.3726 - val_acc: 0.8437 - val_mae: 0.1309 - val_mse: 0.0733

Epoch 20/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2793 - acc: 0.8775 - mae: 0.1155 - mse: 0.0581 - val_loss: 0.3680 - val_acc: 0.8378 - val_mae: 0.1411 - val_mse: 0.0732

Epoch 21/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2773 - acc: 0.8749 - mae: 0.1157 - mse: 0.0577 - val_loss: 0.3865 - val_acc: 0.8451 - val_mae: 0.1316 - val_mse: 0.0735

Epoch 22/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2718 - acc: 0.8777 - mae: 0.1130 - mse: 0.0566 - val_loss: 0.3870 - val_acc: 0.8398 - val_mae: 0.1293 - val_mse: 0.0748

Epoch 23/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2668 - acc: 0.8810 - mae: 0.1110 - mse: 0.0558 - val_loss: 0.3765 - val_acc: 0.8465 - val_mae: 0.1308 - val_mse: 0.0730

Epoch 24/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2646 - acc: 0.8838 - mae: 0.1099 - mse: 0.0550 - val_loss: 0.3926 - val_acc: 0.8364 - val_mae: 0.1301 - val_mse: 0.0740

Epoch 25/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2581 - acc: 0.8841 - mae: 0.1073 - mse: 0.0538 - val_loss: 0.3855 - val_acc: 0.8493 - val_mae: 0.1284 - val_mse: 0.0728

Epoch 26/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2576 - acc: 0.8854 - mae: 0.1070 - mse: 0.0537 - val_loss: 0.3911 - val_acc: 0.8440 - val_mae: 0.1263 - val_mse: 0.0743

Epoch 27/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2541 - acc: 0.8856 - mae: 0.1053 - mse: 0.0531 - val_loss: 0.3986 - val_acc: 0.8447 - val_mae: 0.1278 - val_mse: 0.0740

Epoch 28/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2489 - acc: 0.8878 - mae: 0.1038 - mse: 0.0521 - val_loss: 0.4028 - val_acc: 0.8447 - val_mae: 0.1259 - val_mse: 0.0744

Epoch 29/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2455 - acc: 0.8897 - mae: 0.1019 - mse: 0.0513 - val_loss: 0.4089 - val_acc: 0.8468 - val_mae: 0.1248 - val_mse: 0.0732

Epoch 30/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2400 - acc: 0.8934 - mae: 0.1000 - mse: 0.0500 - val_loss: 0.4043 - val_acc: 0.8409 - val_mae: 0.1237 - val_mse: 0.0741

Epoch 31/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2405 - acc: 0.8936 - mae: 0.0993 - mse: 0.0499 - val_loss: 0.3976 - val_acc: 0.8430 - val_mae: 0.1284 - val_mse: 0.0748

Epoch 32/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2382 - acc: 0.8943 - mae: 0.0989 - mse: 0.0496 - val_loss: 0.4170 - val_acc: 0.8402 - val_mae: 0.1280 - val_mse: 0.0755

Epoch 33/1000

359/359 [==============================] - 1s 3ms/step - loss: 0.2382 - acc: 0.8934 - mae: 0.0989 - mse: 0.0498 - val_loss: 0.3866 - val_acc: 0.8461 - val_mae: 0.1285 - val_mse: 0.0718


y_pred = model.predict(X_test)

print_acc(y_pred, y_test)

acc: 243/293, 0.8293515358361775

LSTM Training¶

Window generator¶

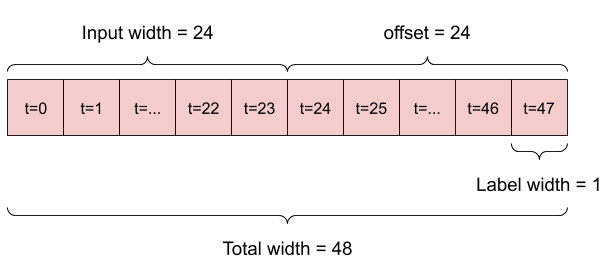

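The figure illustrates a window that uses 24 hours of history to make a single prediction 24 hours into the future. The `WindowGenerator` class below builds windows of this kind: each sample is `input_width` consecutive rows, and its label is the row `offset` steps after the last input row (with `offset=0`, the label is aligned with the last input row).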

class WindowGenerator():
    def __init__(self, input_width, offset, data, train_split):
        self.data = data
        self.input_width = input_width  # number of consecutive rows per input window
        self.offset = offset            # steps from the last input row to the label row
        self.train_split = train_split  # fraction of windows held out for testing

    def to_sequences(self):
        """
        Return the input windows and their labels.
        """
        data_len = len(self.data)
        ret = []
        ret_label = []
        for i in range(data_len - self.offset - self.input_width + 1):
            tmp = self.data[i : i + self.input_width]
            # With offset=0 the label is the last row of the input window
            tmp_label = self.data[i + self.input_width + self.offset - 1]
            ret.append(tmp)
            ret_label.append(tmp_label)
        return np.array(ret), np.array(ret_label)

    def split(self):
        x, y = self.to_sequences()
        # Chronological split (no shuffling); despite its name,
        # train_split is the fraction of windows used for testing
        num_train = int((1 - self.train_split) * x.shape[0])
        X_train = x[:num_train]
        y_train = y[:num_train]
        X_test = x[num_train:]
        y_test = y[num_train:]
        return X_train, y_train, X_test, y_test
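The Python loop in `to_sequences` copies every window one by one. A vectorized sketch of the same windowing and split, assuming NumPy 1.20 or later for `sliding_window_view`, is shown below. The names `make_windows` and `chronological_split` are hypothetical; `test_fraction` plays the role of the class's `train_split` argument.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20


def make_windows(data, input_width, offset=0):
    """Vectorized counterpart of WindowGenerator.to_sequences.

    Sample i is data[i : i + input_width]; its label is
    data[i + input_width + offset - 1], so offset=0 labels each window
    with its own last row and offset=1 with the row right after it.
    """
    data = np.asarray(data)
    n_samples = len(data) - offset - input_width + 1
    # sliding_window_view appends the window axis last; move it to axis 1 so
    # the result is (samples, input_width, features) like the loop version
    windows = np.moveaxis(sliding_window_view(data, input_width, axis=0), -1, 1)
    x = windows[:n_samples].copy()  # copy: the view is read-only
    y = data[input_width + offset - 1:]
    return x, y


def chronological_split(x, y, test_fraction=0.1):
    """Same split as WindowGenerator.split: the last windows form the test set."""
    num_train = int((1 - test_fraction) * len(x))
    return x[:num_train], y[:num_train], x[num_train:], y[num_train:]


x, y = make_windows(np.arange(20).reshape(10, 2), input_width=3, offset=1)
print(x.shape, y.shape)  # (7, 3, 2) (7, 2)
```

For 1-D input such as a single wait-time series, `x` has shape `(samples, input_width)`, matching what the loop version returns.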

SEQ_LEN = 10

cat_vars=['IsWeekend','IsHoliday']

num_vars=['Temperature', 'Pressure', 'Humidity', 'Cloud', 'Wind degree' ]

from tensorflow.keras.layers import Bidirectional, Dropout, LSTM, Dense, Activation

Preprocess data¶

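average_sampled_train['temp_date'] = average_sampled_train['Time'].apply(lambda x: x.date())
# Average every numeric column over all facilities for each day
# (numeric_only=True is required on pandas >= 2.0, where text columns raise an error)
grouped_average = average_sampled_train.groupby('temp_date').mean(numeric_only=True)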

grouped_average = grouped_average[['Temperature', 'Max temperature', 'Min temperature', 'Wind degree', 'Humidity' , 'Wind speed', 'Cloud', 'IsWeekend', 'IsHoliday', "Wait time", "Pressure"]]

grouped_average.columns

Index(['Temperature', 'Max temperature', 'Min temperature', 'Wind degree',

'Humidity', 'Wind speed', 'Cloud', 'IsWeekend', 'IsHoliday',

'Wait time', 'Pressure'],

dtype='object')

numeric_transformer=Pipeline(steps=[
    ('scaler', RobustScaler())])
categorical_transformer=Pipeline(steps=[
    ('oneHot',OneHotEncoder(sparse=False))])  # use sparse_output=False on scikit-learn >= 1.2

preprocessor=ColumnTransformer(transformers=[
    ('num',numeric_transformer,num_vars),
    ('cat',categorical_transformer,cat_vars)])  # cat_vars must not contain 'Name': the daily groupby drops it

data_transformed=preprocessor.fit_transform(grouped_average)
print(data_transformed.shape)

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

/usr/local/lib/python3.6/dist-packages/sklearn/utils/__init__.py in _get_column_indices(X, key)

462 try:

--> 463 column_indices = [all_columns.index(col) for col in columns]

464 except ValueError as e:

/usr/local/lib/python3.6/dist-packages/sklearn/utils/__init__.py in <listcomp>(.0)

462 try:

--> 463 column_indices = [all_columns.index(col) for col in columns]

464 except ValueError as e:

ValueError: 'Name' is not in list

The above exception was the direct cause of the following exception:

ValueError Traceback (most recent call last)

<ipython-input-38-c2d6048db054> in <module>()

8 ('cat',categorical_transformer,cat_vars)])

9

---> 10 data_transformed=preprocessor.fit_transform(grouped_average)

11 print(data_transformed.shape)

/usr/local/lib/python3.6/dist-packages/sklearn/compose/_column_transformer.py in fit_transform(self, X, y)

514 self._validate_transformers()

515 self._validate_column_callables(X)

--> 516 self._validate_remainder(X)

517

518 result = self._fit_transform(X, y, _fit_transform_one)

/usr/local/lib/python3.6/dist-packages/sklearn/compose/_column_transformer.py in _validate_remainder(self, X)

322 cols = []

323 for columns in self._columns:

--> 324 cols.extend(_get_column_indices(X, columns))

325 remaining_idx = list(set(range(self._n_features)) - set(cols))

326 remaining_idx = sorted(remaining_idx) or None

/usr/local/lib/python3.6/dist-packages/sklearn/utils/__init__.py in _get_column_indices(X, key)

466 raise ValueError(

467 "A given column is not a column of the dataframe"

--> 468 ) from e

469 raise

470

ValueError: A given column is not a column of the dataframe
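`preprocessor.fit_transform(grouped_average)` fits the `RobustScaler` on every day, including the days that later become test windows, so statistics from the test period leak into the training features. A minimal sketch of a chronological alternative is shown below, assuming a time-ordered frame like `grouped_average` and column lists like `num_vars` and `cat_vars`: it fits the transformer on the earliest rows only and then transforms all rows. `sparse_threshold=0` makes `ColumnTransformer` always return a dense array, so the sketch does not depend on the `OneHotEncoder` argument name (`sparse` vs `sparse_output`), which differs between scikit-learn versions. The function name and the toy data are hypothetical, and the row cut-off here only approximately matches the window cut-off used by `WindowGenerator.split`.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, RobustScaler


def fit_transform_chronological(frame, num_vars, cat_vars, test_fraction=0.1):
    """Fit scaling/encoding on the earliest rows only, then transform every row."""
    # Rows are assumed to be in time order (e.g. one row per day)
    num_train = int((1 - test_fraction) * len(frame))
    preprocessor = ColumnTransformer(
        transformers=[
            ('num', RobustScaler(), num_vars),
            # Categories unseen during fitting are encoded as all zeros
            ('cat', OneHotEncoder(handle_unknown='ignore'), cat_vars),
        ],
        sparse_threshold=0,  # always return a dense NumPy array
    )
    preprocessor.fit(frame.iloc[:num_train])  # statistics from training rows only
    return preprocessor.transform(frame), preprocessor, num_train


# Hypothetical toy data: ten days of temperature and a holiday flag
toy = pd.DataFrame({'Temperature': np.arange(10, dtype=float),
                    'IsHoliday': [0, 0, 1, 0, 0, 0, 0, 0, 0, 1]})
features, fitted, n_train = fit_transform_chronological(
    toy, ['Temperature'], ['IsHoliday'], test_fraction=0.5)
print(features.shape)  # (10, 3)
```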

wg = WindowGenerator(data=data_transformed, input_width=SEQ_LEN, offset=0, train_split=0.1)

wg_2 = WindowGenerator(data=grouped_average['Wait time'].to_numpy(), input_width=SEQ_LEN, offset=0, train_split=0.1)

X_train, _, X_test, _ = wg.split()

_, y_train, _, y_test = wg_2.split()

print(X_train.shape)

print(y_train.shape)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-39-3e9d8c3f67d6> in <module>()

----> 1 wg = WindowGenerator(data=data_transformed, input_width=SEQ_LEN, offset=0, train_split=0.1)

2 wg_2 = WindowGenerator(data=grouped_average['Wait time'].to_numpy(), input_width=SEQ_LEN, offset=0, train_split=0.1)

3 X_train, _, X_test, _ = wg.split()

4 _, y_train, _, y_test = wg_2.split()

5 print(X_train.shape)

NameError: name 'WindowGenerator' is not defined

WINDOW_SIZE = SEQ_LEN

model = keras.Sequential()
# Input layer
# Bidirectional RNNs read the input sequence in both the forward and backward directions.
model.add(Bidirectional(LSTM(WINDOW_SIZE, return_sequences=True), input_shape=(WINDOW_SIZE, X_train.shape[-1])))
model.add(Dropout(rate=0.2))
# 1st hidden layer
model.add(Bidirectional(LSTM(WINDOW_SIZE * 2, return_sequences=True)))
model.add(Dropout(rate=0.2))
# 2nd hidden layer: return only the last step's output
model.add(Bidirectional(LSTM(WINDOW_SIZE, return_sequences=False)))
# Output layer: one linear unit for the daily average wait time
model.add(Dense(units=1))
model.add(Activation('linear'))

model.compile(loss='mean_squared_error', optimizer='adam', metrics=['mae', 'mse'])
model.summary()

history = model.fit(X_train, y_train, epochs=1000, shuffle=False, validation_split=0.1, callbacks=[early_stop])

Epoch 1/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2527 - acc: 0.8907 - mae: 0.1016 - mse: 0.0513 - val_loss: 0.3764 - val_acc: 0.8332 - val_mae: 0.1546 - val_mse: 0.0773

Epoch 2/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2506 - acc: 0.8893 - mae: 0.1032 - mse: 0.0517 - val_loss: 0.3732 - val_acc: 0.8353 - val_mae: 0.1477 - val_mse: 0.0752

Epoch 3/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2428 - acc: 0.8924 - mae: 0.1001 - mse: 0.0501 - val_loss: 0.3711 - val_acc: 0.8276 - val_mae: 0.1433 - val_mse: 0.0750

Epoch 4/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2407 - acc: 0.8952 - mae: 0.0993 - mse: 0.0495 - val_loss: 0.3701 - val_acc: 0.8332 - val_mae: 0.1468 - val_mse: 0.0752

Epoch 5/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2404 - acc: 0.8929 - mae: 0.0995 - mse: 0.0496 - val_loss: 0.3691 - val_acc: 0.8409 - val_mae: 0.1387 - val_mse: 0.0740

Epoch 6/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2375 - acc: 0.8962 - mae: 0.0976 - mse: 0.0488 - val_loss: 0.3705 - val_acc: 0.8367 - val_mae: 0.1407 - val_mse: 0.0743

Epoch 7/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2360 - acc: 0.8923 - mae: 0.0981 - mse: 0.0491 - val_loss: 0.3755 - val_acc: 0.8360 - val_mae: 0.1370 - val_mse: 0.0748

Epoch 8/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2347 - acc: 0.8962 - mae: 0.0964 - mse: 0.0482 - val_loss: 0.3740 - val_acc: 0.8451 - val_mae: 0.1411 - val_mse: 0.0746

Epoch 9/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2283 - acc: 0.8962 - mae: 0.0954 - mse: 0.0478 - val_loss: 0.3884 - val_acc: 0.8353 - val_mae: 0.1369 - val_mse: 0.0751

Epoch 10/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2257 - acc: 0.9017 - mae: 0.0935 - mse: 0.0469 - val_loss: 0.3705 - val_acc: 0.8395 - val_mae: 0.1339 - val_mse: 0.0737

Epoch 11/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2231 - acc: 0.8997 - mae: 0.0924 - mse: 0.0463 - val_loss: 0.3835 - val_acc: 0.8416 - val_mae: 0.1296 - val_mse: 0.0732

Epoch 12/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2272 - acc: 0.8972 - mae: 0.0946 - mse: 0.0475 - val_loss: 0.3905 - val_acc: 0.8430 - val_mae: 0.1307 - val_mse: 0.0745

Epoch 13/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2156 - acc: 0.9003 - mae: 0.0904 - mse: 0.0453 - val_loss: 0.3855 - val_acc: 0.8465 - val_mae: 0.1280 - val_mse: 0.0729

Epoch 14/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2194 - acc: 0.9041 - mae: 0.0901 - mse: 0.0452 - val_loss: 0.3853 - val_acc: 0.8381 - val_mae: 0.1290 - val_mse: 0.0740

Epoch 15/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2144 - acc: 0.9045 - mae: 0.0893 - mse: 0.0446 - val_loss: 0.3937 - val_acc: 0.8472 - val_mae: 0.1296 - val_mse: 0.0745

Epoch 16/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2119 - acc: 0.9065 - mae: 0.0874 - mse: 0.0438 - val_loss: 0.3989 - val_acc: 0.8458 - val_mae: 0.1282 - val_mse: 0.0749

Epoch 17/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2132 - acc: 0.9031 - mae: 0.0889 - mse: 0.0446 - val_loss: 0.4087 - val_acc: 0.8458 - val_mae: 0.1235 - val_mse: 0.0749

Epoch 18/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2098 - acc: 0.9048 - mae: 0.0872 - mse: 0.0438 - val_loss: 0.3939 - val_acc: 0.8395 - val_mae: 0.1307 - val_mse: 0.0753

Epoch 19/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2060 - acc: 0.9079 - mae: 0.0858 - mse: 0.0429 - val_loss: 0.3988 - val_acc: 0.8493 - val_mae: 0.1234 - val_mse: 0.0736

Epoch 20/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2082 - acc: 0.9059 - mae: 0.0865 - mse: 0.0435 - val_loss: 0.4140 - val_acc: 0.8507 - val_mae: 0.1226 - val_mse: 0.0741

Epoch 21/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2008 - acc: 0.9093 - mae: 0.0833 - mse: 0.0419 - val_loss: 0.4228 - val_acc: 0.8388 - val_mae: 0.1248 - val_mse: 0.0767

Epoch 22/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2061 - acc: 0.9080 - mae: 0.0849 - mse: 0.0426 - val_loss: 0.4233 - val_acc: 0.8500 - val_mae: 0.1232 - val_mse: 0.0751

Epoch 23/1000

403/403 [==============================] - 1s 3ms/step - loss: 0.2051 - acc: 0.9044 - mae: 0.0854 - mse: 0.0431 - val_loss: 0.4062 - val_acc: 0.8493 - val_mae: 0.1255 - val_mse: 0.0747

Epoch 24/1000

21/403 [>.............................] - ETA: 0s - loss: 0.2305 - acc: 0.9033 - mae: 0.0996 - mse: 0.0482

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

<ipython-input-40-52ca1206cf0b> in <module>()

----> 1 history = model.fit(X_train, y_train, epochs=1000, shuffle=False, validation_split=0.1, callbacks=[early_stop])


KeyboardInterrupt:

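plot_history(history)

y_pred = model.predict(X_test)
print()
print(y_test[:10])

# Compare actual and predicted daily average wait time on the held-out days
plt.plot(y_test, label='Actual')
plt.plot(y_pred.flatten(), label='Predicted')
plt.legend()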

[15.10416667 25.24390244 20.91836735 37.47126437 19.53846154]

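The plot compares the LSTM's predictions with the actual daily average wait time by eye. A small numeric check is sketched below, assuming `y_test` and `y_pred` are 1-D sequences of daily averages in time order: it computes the mean absolute error of the model and of a naive "same as the previous day" forecast on the same days. If the model does not beat the naive forecast, it has learned little beyond the last observed value. The function name `mae_vs_persistence` is hypothetical.

```python
import numpy as np


def mae_vs_persistence(y_true, y_pred):
    """MAE of the model and of a naive previous-day forecast on the same days."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    # Skip the first day: the naive forecast needs a previous observation
    model_mae = float(np.mean(np.abs(y_true[1:] - y_pred[1:])))
    naive_mae = float(np.mean(np.abs(y_true[1:] - y_true[:-1])))
    return model_mae, naive_mae
```

It can be called as `mae_vs_persistence(y_test, y_pred)`; with only a handful of test days, both numbers will be noisy.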

Conclusion¶

Because the LSTM model is trained on average daily data, each day yields only one sample (the printed test labels cover just five days), so with the current amount of data it may not be able to predict accurate results. However, the DNN models provide good predictions for both category results (85.3% and 82.9% test accuracy in the two classification experiments above) and regression results.